Physics for AR Engine

Organization: Meta Reality Labs

Time: 01.2023 - 06.2023

Project team: Sangwoo Han (Design Lead), Cameron Sylvia (Product Manager), Rusty Koonse (Engineer)

0. What is AR Engine?

AR Engine is a software framework driving mixed reality experiences across multiple devices and platforms at Meta. Supported by a team of over 20 engineers, AR Engine collaborates closely with partner product teams to develop core capabilities such as rendering, spatial recognition, sound, and animations.

As the design lead for AR Engine, my main role is to guide designers and engineers from partner teams in implementing these features through workshops, prototyping, and best practices.

1. Project goals

The engineering team had been working on adding physics capabilities to AR Engine as part of an effort to transition the platform from supporting mobile experiences (such as Instagram filters) to powering AR Glasses (Orion). With this new capability enabled, the project began with an open question: What meaningful user value can physics bring to the Orion platform?

To navigate this challenge, I established three primary goals:

- Organize workshops to generate ideas and narrow them down to a few prototype concepts.

- Showcase the design strategy by building a working prototype on Spark using the WIP library (JavaScript).

- Share the design strategy with Orion’s product teams, assist with technology integration, and collaborate on best practices.

2. Design prototype

To generate a wide range of promising ideas, I conducted multiple rounds of workshops with stakeholders, using specific frameworks to guide the discussions.

To narrow down the ideas, I defined a set of criteria—or "razors"—based on project goals and technical feasibility. The prototype needed to meet the following standards:

- Solve real problems: The prototype should demonstrate how physics can provide meaningful solutions to real-world problems on the Orion platform.

- Be inspiring: For the technology to be adopted, the prototype must go beyond a simple tech demo. It should spark new conversations and collaborations, paving the way for additional features, design guidelines, and best practices.

- Be lightweight: Physics processing is resource-intensive in AR, especially with Orion’s UX model where multiple virtual objects coexist. To avoid overloading the system, the prototype should limit physics elements to only a few key components.

One use case that emerged was an e-commerce demo allowing users to virtually try out physical products. This demo was intentionally lightweight, with only a few physics-enabled elements. It was published to the internal content library, and I collaborated with the entertainment and utility teams to further explore its implementation.

3. Additional problem - tooling

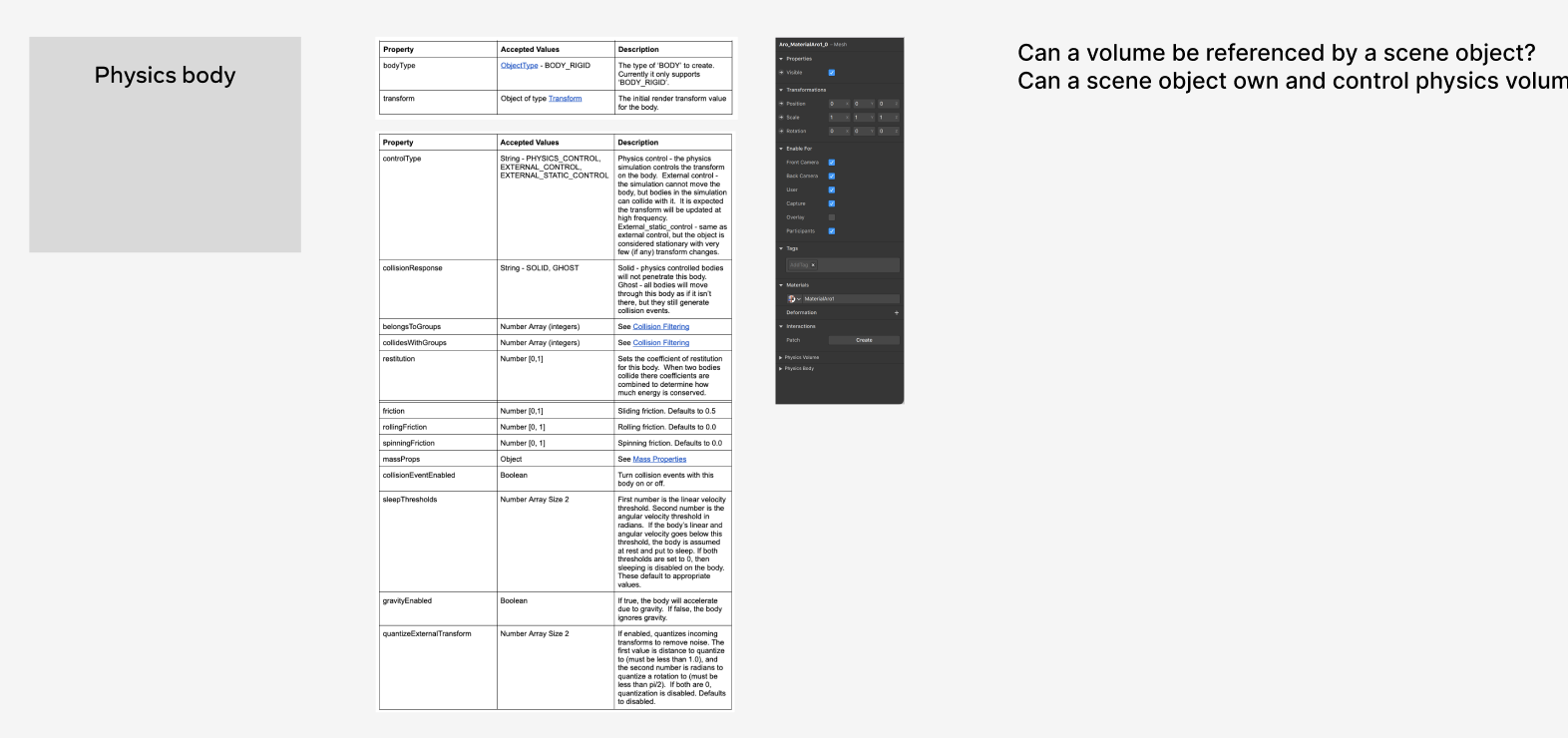

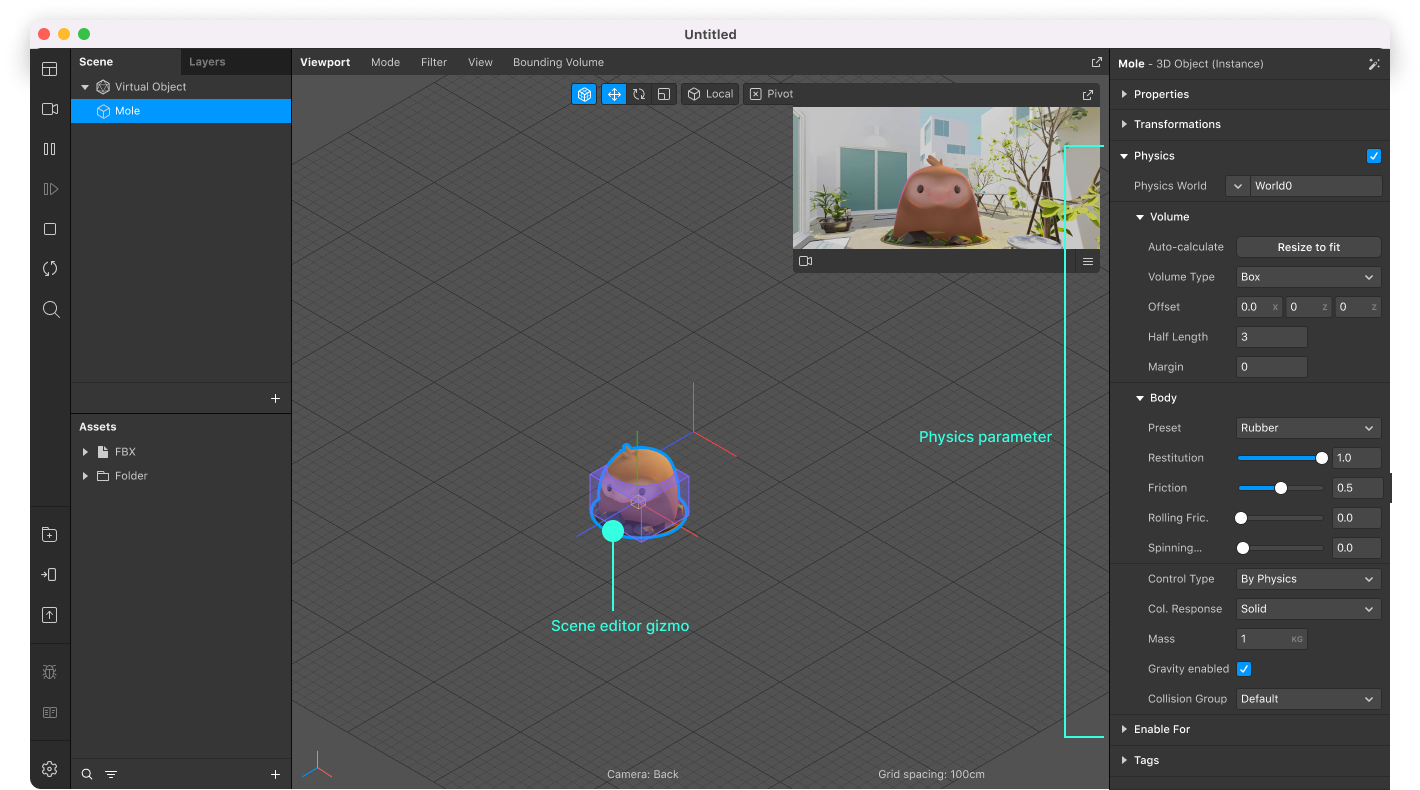

While working on the prototype, I identified a major issue with the new API design affecting the workflow for Spark creators. Similar to other 3D engines like Unity or Unreal, Spark has two complementary development environments: visual editing and scripting.

Typically, a Spark creator begins by building a 3D world in the Visual Editor, then moves into scripting to add advanced interactions and animations, as outlined below.

However, because the physics API was developed in isolation by the AR Engine team, its approach did not align with existing Spark conventions. An experienced Spark creator would expect to add physics to a scene object as a property of that object, like this:

    const sceneBall = await Scene.root.findFirst("Ball");
    ...
    sceneBall.physics.body.controlType = Physics.controlType.PHYSICS_CONTROL;

In contrast, the physics APIs developed by the AR Engine engineers were packaged in a separate class. As a result, creators had to create invisible rigid body objects, like this:

    let physicsBall = world.create({
        name: "Bouncy Ball",
        type: "BODY",
        bodyType: "BODY_RIGID",
        controlType: "PHYSICS_CONTROL",
        collisionResponse: "SOLID",
        restitution: 0.9, // Bouncy!
        massProps: {
            mass: 0.1,
        },
        sleepThresholds: [0.8, 1.0], // Linear then angular velocity sleeping thresholds
        belongsToGroups: [0],
        collidesWithGroups: [0],
        collisionEventEnabled: false,
        collisionVolume: collisionBall.id,
        transform: {
            translation: [0, 0.25, 0.7],
            rotationQuaternion: [0, 0, 0, 1],
        },
    });

They then had to synchronize the position and scale of each scene object with its invisible rigid body through script:

    sceneBall.transform.translation = physicsBall.transform.translation;
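To give a sense of the bookkeeping this pushes onto creators, here is a minimal sketch of the pairing and per-frame copying that every physics-enabled object would need. It uses plain JavaScript objects as stand-ins for the scene object and the rigid body. `makeSceneObject`, `makeRigidBody`, `bindBodyToSceneObject`, and `syncAll` are hypothetical names introduced for illustration and are not part of Spark or the AR Engine physics library.

```javascript
// Stand-ins for a Spark scene object and an AR Engine rigid body.
// Both shapes are assumptions made for illustration only.
function makeSceneObject(name) {
  return {
    name,
    transform: { translation: [0, 0, 0], rotationQuaternion: [0, 0, 0, 1] },
  };
}

function makeRigidBody(name, translation) {
  return {
    name,
    transform: { translation: [...translation], rotationQuaternion: [0, 0, 0, 1] },
  };
}

// Hypothetical registry pairing each visible object with its invisible body.
const bindings = [];

function bindBodyToSceneObject(sceneObject, body) {
  bindings.push({ sceneObject, body });
}

// Copy every body's pose onto its scene object.
// Intended to be called once per frame, after the physics step.
function syncAll() {
  for (const { sceneObject, body } of bindings) {
    sceneObject.transform.translation = [...body.transform.translation];
    sceneObject.transform.rotationQuaternion = [...body.transform.rotationQuaternion];
  }
}

const sceneBall = makeSceneObject("Ball");
const physicsBall = makeRigidBody("Bouncy Ball", [0, 0.25, 0.7]);
bindBodyToSceneObject(sceneBall, physicsBall);

// Pretend the physics step moved the body downward, then sync.
physicsBall.transform.translation[1] = 0.2;
syncAll();
console.log(sceneBall.transform.translation); // [ 0, 0.2, 0.7 ]
```

Even in this simplified form, the creator has to maintain a second object and a registry for every ball, which is the overhead an object-level `physics` property would hide.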

This API design not only makes creating physics bodies incredibly difficult but also makes UI design for the Visual Editor nearly impossible. To address this, I took the initiative to design the user experience for Spark’s Visual Editor in collaboration with the Spark design team. The project I proposed had two main objectives:

- Enable physics for the Spark creator community in a way that integrates seamlessly with the existing workflow and mental model.

- Bring the resulting design model back to the AR Engine team to inform the API redesign.

A key design challenge was categorizing physics parameters and defining their behaviors to ensure the features could be accessed intuitively by creators.
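As a rough illustration of what categorization means here, the flat descriptor fields from the rigid body example could be sorted into editor sections. The sketch below is only an assumption about how such a grouping might look. The section names (`body`, `material`, `collision`, `transform`) and the `groupBySection` helper are hypothetical and do not represent the categorization that was studied or shipped.

```javascript
// Hypothetical grouping of the flat descriptor fields into editor sections.
// The section names are assumptions, not the shipped Visual Editor design.
const SECTIONS = {
  body: ["bodyType", "controlType", "massProps", "sleepThresholds"],
  material: ["restitution"],
  collision: [
    "collisionResponse",
    "collisionVolume",
    "belongsToGroups",
    "collidesWithGroups",
    "collisionEventEnabled",
  ],
  transform: ["transform"],
};

function groupBySection(descriptor) {
  const grouped = {};
  const known = new Set();
  for (const [section, keys] of Object.entries(SECTIONS)) {
    grouped[section] = {};
    for (const key of keys) {
      known.add(key);
      if (key in descriptor) grouped[section][key] = descriptor[key];
    }
  }
  // Fields not assigned to any section stay visible rather than being dropped.
  grouped.other = {};
  for (const key of Object.keys(descriptor)) {
    if (!known.has(key)) grouped.other[key] = descriptor[key];
  }
  return grouped;
}

const grouped = groupBySection({
  name: "Bouncy Ball",
  type: "BODY",
  bodyType: "BODY_RIGID",
  controlType: "PHYSICS_CONTROL",
  collisionResponse: "SOLID",
  restitution: 0.9,
  massProps: { mass: 0.1 },
});
console.log(Object.keys(grouped.collision)); // [ 'collisionResponse' ]
console.log(Object.keys(grouped.other)); // [ 'name', 'type' ]
```

Grouping the fields this way makes it easier to see which parameters a creator would set together in one inspector panel and which ones still lack an obvious home.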

Examples of studies for categorization

The following final designs were shipped, and the API design was updated accordingly.

4. Impact

The project had four main outcomes:

- Initiated smaller-scale AR game projects inspired by the mini basketball demo.

- Validated e-commerce use cases from the perspective of ease of development for third parties.

- Delivered the appropriate tools for Spark Studio, including the Visual Editor, Patch Editor, and scripting.

- Updated the physics API based on the mental model I created for the Visual Editor.