AR Glasses interaction prototype

Personal project

Time: Oct. 2020

1. Inspiration

HoloLens' gesture input uses the hardware's robust sensors to let users interact with their bare hands, directly, with computer-generated objects placed virtually in the real world. Because users can see and touch these virtual objects as if they were as tangible as the real objects around them, this opens the door to an ideal world where every interface is freed from screens and becomes invisible. I believe that is what 'augmenting reality' truly means. Over the past 30 years we have become residents of both the physical and the virtual worlds, and augmented reality empowers us as human beings by merging the two. It is about moving information and meaning that could only be presented on monitors to where they belong.

The interaction models offered by current AR headsets work well, but there is still room for improvement. This interaction demo proposes one such improvement in the context described above.

2. Everything has to be in the field of view

Currently, gesture detection in most AR headsets relies on the headset's own sensors, which causes a few problems.

1. Users have to raise their hands to eye level

Since all virtual objects are displayed in the field of view, users have to deliberately raise their hands in front of them so the device can recognize them and let them interact with the virtual objects. My previous explorations of wearable smartwatches showed that this is an unnatural, tiring gesture, one that users treat as a deliberate performance rather than an intuitive interaction. A good example is the HoloLens Bloom gesture. As magical as it looks to summon a contextual menu with it, the gesture is often awkward and slow, and it takes effort to perform successfully.

2. Hand gestures carry too much of the workload

In the current interaction paradigm of AR headsets, users are burdened with both targeting and interacting through their hand movements. Let's take a quick look at the following two near-future scenarios.

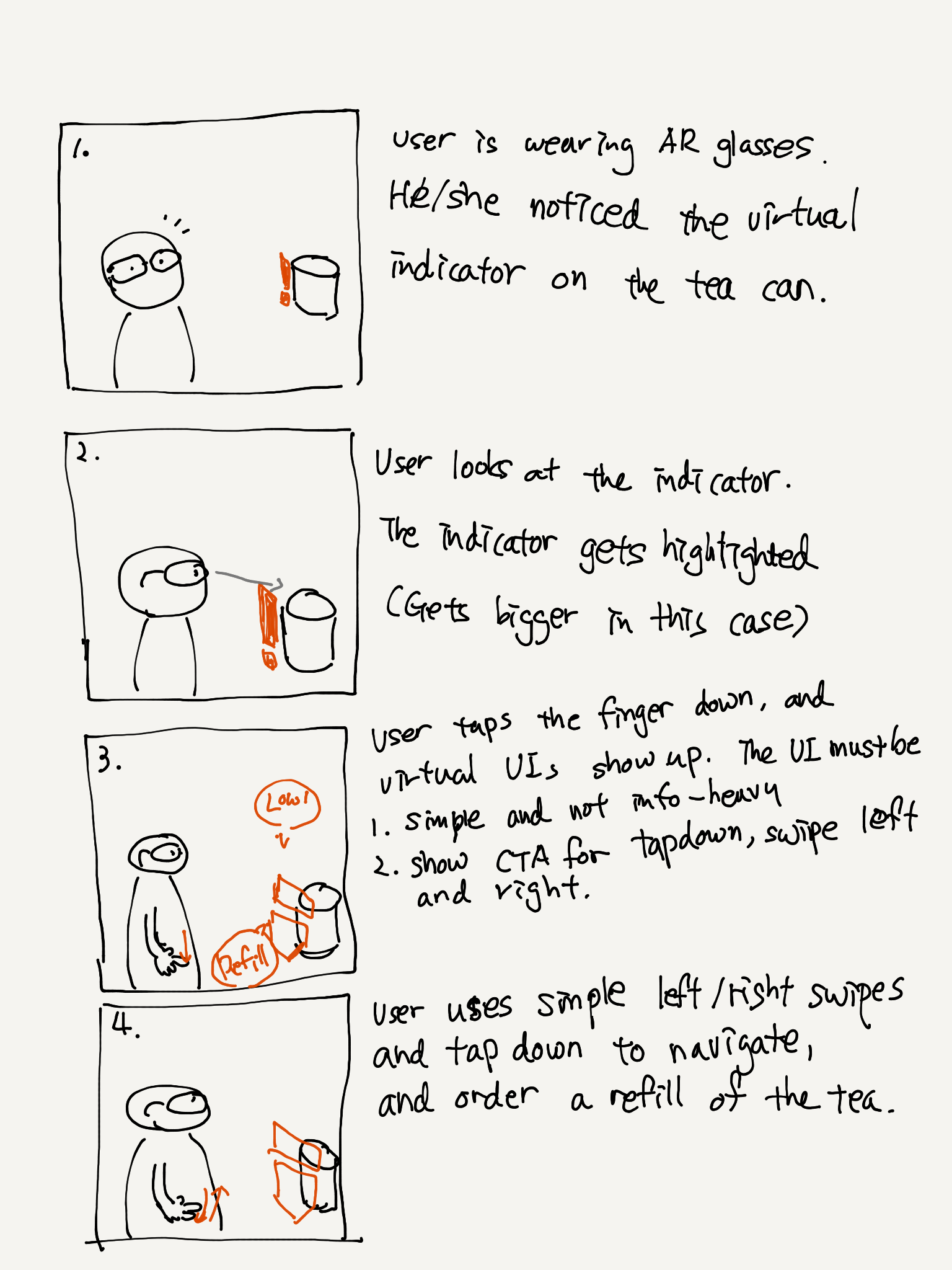

- I keep my tea leaves in a container that can communicate with my AR glasses. When I'm running low on tea, the container displays an indicator on the AR layer. I can walk up to the container and tap the indicator to summon a virtual UI layer. From there, I can quickly order a refill by interacting with it.

- I want to turn off my smart lamp, which can be controlled with hand gestures. I raise my arm, point at the lamp, and swipe downward to turn it off.

In both cases, the user first has to target the object they want to interact with through a gesture (placing a finger on a button in the virtual UI layer, or aiming an arm at the lamp) and only then execute the interaction. This model has two noticeable problems:

- Users have to be much more deliberate with their gestures. The targeting stage is particularly challenging because there is no haptic feedback to tell them whether they have successfully selected a target.

- The virtual UIs still rely on screen-based interaction models, such as buttons in a pop-up dialog box. Besides being hard to interact with, this model can easily overpopulate the virtual space with many small screens.

Can this interaction be more intuitive, faster, more ubiquitous, and more 'magical'?

More importantly, how can we make the user the hero, not the product?

3. Just take a look

The new interaction model proposed in this research uses eye tracking for targeting. The user specifies the object to interact with simply by looking at it, then executes the interaction with a simple gesture while keeping their arms down. Before jumping into the user scenarios, I made a few technical assumptions:

- The user's AR glasses have reliable eye tracking that captures both gaze direction and depth.

- The user wears a companion device that tracks hand movements outside the field of view of the AR glasses' cameras, or some other technology that delivers an equivalent experience.
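The split between gaze targeting and gesture execution can be sketched in a few lines. This is a minimal, hypothetical Python sketch, not the Unity prototype's actual code. It assumes that the glasses report a gaze origin, a gaze direction, and a fixation depth in meters, and that each connected object exposes its position. The names `SmartObject`, `pick_gaze_target`, and `handle_gesture`, along with the 5° and 0.5 m thresholds, are illustrative assumptions. The sketch picks the object closest to the line of sight whose distance matches the fixation depth, then routes a gesture from the off-view hand tracker to that object.

```python
import math
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _norm(a: Vec3) -> float:
    return math.sqrt(_dot(a, a))


@dataclass
class SmartObject:
    """A connected object in the room (hypothetical model)."""
    name: str
    position: Vec3  # meters, in the same frame as the gaze origin
    actions: Dict[str, Callable[[], str]] = field(default_factory=dict)


def pick_gaze_target(
    origin: Vec3,
    direction: Vec3,
    depth: float,
    objects: Sequence[SmartObject],
    max_angle_deg: float = 5.0,    # assumed angular tolerance of the eye tracker
    depth_tolerance: float = 0.5,  # assumed tolerance on fixation depth, meters
) -> Optional[SmartObject]:
    """Return the object nearest the line of sight at the fixated depth, or None."""
    dir_len = _norm(direction)
    if dir_len == 0:
        raise ValueError("gaze direction must be non-zero")
    best: Optional[SmartObject] = None
    best_angle = math.inf
    for obj in objects:
        to_obj = _sub(obj.position, origin)
        dist = _norm(to_obj)
        if dist == 0:
            continue
        # Angle between the gaze ray and the direction to the object.
        cos_a = max(-1.0, min(1.0, _dot(to_obj, direction) / (dist * dir_len)))
        angle = math.degrees(math.acos(cos_a))
        # Depth filters out objects that lie along the same line of sight.
        within_depth = abs(dist - depth) <= depth_tolerance
        if angle <= max_angle_deg and within_depth and angle < best_angle:
            best, best_angle = obj, angle
    return best


def handle_gesture(target: Optional[SmartObject], gesture: str) -> Optional[str]:
    """Route a gesture from the (assumed) wrist tracker to the gazed-at object."""
    if target is None or gesture not in target.actions:
        return None
    return target.actions[gesture]()


lamp = SmartObject("lamp", (0.0, 0.0, 3.0), {"swipe_down": lambda: "lamp off"})
tea = SmartObject("tea container", (1.0, 0.0, 1.0), {"tap": lambda: "refill ordered"})
room = [lamp, tea]

target = pick_gaze_target((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), 3.0, room)
print(target.name if target else None)       # lamp
print(handle_gesture(target, "swipe_down"))  # lamp off
```

Keeping the two stages decoupled means gaze alone decides which object is addressed and a small gesture decides what happens to it, so the hand never has to enter the field of view. A real system would likely also need dwell-time filtering or visual confirmation to avoid accidental selections. This sketch does not model that.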

Storyboard

Scenario video demo

Interaction prototype

This prototype was built in Unity to create a testing environment close to the model proposed in the scenario and the demo video. Leap Motion was used for gesture tracking.